Say your baseball-hitting robot uses cameras to track and hit a thrown ball. In this and other systems where computer vision is a sensor for a time-critical process such as a tight control loop, latency is very important. My project, Micron, runs a high-frequency control loop to achieve micromanipulation in surgical environments. Micron depends on cameras to know where important anatomy is, track the tool tip, and register the cameras to other high-bandwidth position sensors. This week I analyzed and tried to optimize camera performance.

My system has dual PointGrey Flea2 cameras that can capture at 800×600 in YUV422 @ 30Hz, which is then converted to RGB on the PC. To increase bandwidth, I first switched to Format7 Raw8 mode. The custom Format7 mode has a number of advantages:

- Bayer. You get the raw values from the CCD in Bayer format. This is a more compact (and native) representation than RGB or a YUV encoding, allowing more data to pass over Firewire to the PC. Also, fast de-Bayering algorithms exist in popular software like OpenCV’s cvtColor or on the GPU.

- Region of interest. You can specify a custom region of interest so you don’t have to transfer the entire frame. This allows you to choose the ideal point on the “frame size vs. frame rate” curve. If you only need part of the frame, you can increase how fast images are transferred to the PC.

- Event notifications. Technically available in other modes as well, event notifications allow you to process partial frames as they arrive. Each image consists of individual packets that are transferred sequentially over Firewire, and the driver will let you know when each packet arrives. Theoretically, if your image processing algorithm operates on the image sequentially, you can transfer and process in tandem. For instance, at full 1032×776 resolution, it takes a Flea2 ~33 ms from the first image packet to the last image packet. Traditionally you can’t start processing the image until the last packet has arrived and the full image is available. However, if you are, say, doing color blob tracking where you need to test each pixel to see if it is some predefined color such as red, you can start as soon as parts of the image arrive. After the first 10% of the image arrives, you can process that part while the second 10% is being transferred from the camera. In theory this can significantly reduce overall latency because image transfer and image processing no longer have to happen one after the other.
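To get a feel for how much overlapping transfer and processing could help, here is a toy timing model in Python. It is only a sketch under simplifying assumptions that are mine, not measurements: chunks arrive at evenly spaced times, processing cost is split evenly across chunks, and there is no per-notification overhead. The function names and the 20 ms processing time used below are hypothetical.

```python
def sequential_latency(transfer_ms, process_ms):
    """Wait for the whole frame to arrive, then process all of it."""
    return transfer_ms + process_ms


def pipelined_latency(transfer_ms, process_ms, chunks):
    """Process each chunk as soon as it has arrived (idealized model).

    Assumes evenly sized chunks, evenly divided processing cost,
    and zero overhead per notification.
    """
    chunk_process = process_ms / chunks
    done = 0.0  # time at which the previous chunk finished processing
    for i in range(chunks):
        arrived = transfer_ms * (i + 1) / chunks
        # A chunk can start only once it has arrived AND the
        # previous chunk has finished processing.
        done = max(arrived, done) + chunk_process
    return done
```

With the ~33 ms transfer time from above, a hypothetical 20 ms of processing, and 10 chunks, `sequential_latency(33.0, 20.0)` gives 53 ms while `pipelined_latency(33.0, 20.0, 10)` gives 35 ms: once processing keeps up with the transfer, only the last chunk's processing is left after the final packet arrives. If processing is slower than transfer, the model shows the gain shrinking to roughly one chunk's worth of transfer time.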

I tried using all these tricks to reduce latency, although I wasn’t able to get event notifications working properly. At first, it seemed like a great idea. I was able to get up to 128 notifications per image, which is amazing! If my algorithm runs in real time, that means I could reduce my processing overhead to the time taken to process less than 1% of the image. However, event notifications seem to have a number of limitations and peculiarities that may be due to my poor programming or setup.

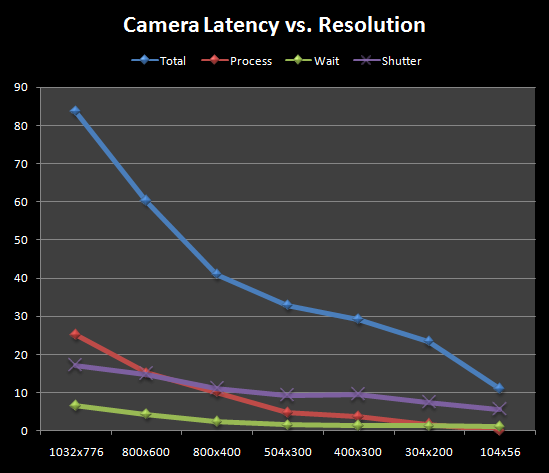

To test latency, I took the color detection and blob tracker code I run to find colored paint marks on my tool tip and applied it to a blinking-LED problem. At a random time, I turn a green LED on and wait until my color blob detector sees the LED. The time between these two events is, by definition, the latency between when something happens and when the cameras see it happening. The result is shown below. Total is the time between turning the LED on and detecting it. Process time is the image processing time. Wait time is the average time a frame spends waiting for the previous image to finish processing. And shutter time is the average time between the LED turning on and the shutter opening for the next image, which captures the LED.
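The measurement loop itself can be sketched roughly as follows. This is not my actual test code, just a minimal Python illustration of the idea. `turn_led_on` and `led_visible` are hypothetical callbacks: the first would switch the LED on, the second would grab the next frame and run the blob detector on it. The `clock` and `sleep` parameters exist only so the loop can be exercised without real hardware.

```python
import random
import time


def measure_latency(turn_led_on, led_visible, max_delay_s=0.05,
                    timeout_s=1.0, clock=time.perf_counter,
                    sleep=time.sleep):
    """Return seconds from switching the LED on to first detecting it.

    turn_led_on and led_visible are hypothetical callbacks supplied
    by the caller. Returns None if the LED is not seen in time.
    """
    # Random start so the LED is not phase-locked to the shutter.
    sleep(random.uniform(0.0, max_delay_s))
    turn_led_on()
    start = clock()
    while clock() - start < timeout_s:
        if led_visible():
            return clock() - start
    return None
```

Repeating this many times and averaging gives the total latency. The process, wait, and shutter components need extra timestamps taken inside the capture and processing code.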