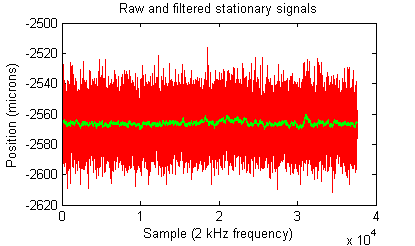

Because my robot’s control system runs on a LabVIEW real-time machine, I have no recourse but to add new features in LabVIEW. Oh, I tried coding new stuff in C++ on another computer and streaming information via UDP over gigabit, but alas, additional latencies of just a few milliseconds are enough to make significant differences in performance when your control loop runs at 2 kHz. So I must code in LabVIEW as an inheritor of legacy code.
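
To put rough numbers on that: at 2 kHz each loop iteration lasts 0.5 ms, so even a couple of milliseconds of added latency means the controller is acting on data that is several cycles old. A quick sketch in Python, where the example latencies are illustrative values and not measurements:

```python
# Back-of-the-envelope: how many control cycles does a given latency cost?
LOOP_RATE_HZ = 2000  # control loop rate stated in the post


def cycles_of_delay(latency_ms: float, loop_rate_hz: float = LOOP_RATE_HZ) -> float:
    """Return the latency expressed in control-loop periods."""
    period_ms = 1000.0 / loop_rate_hz  # 0.5 ms at 2 kHz
    return latency_ms / period_ms


# Hypothetical latencies, chosen only to illustrate "a few milliseconds".
for latency_ms in (1.0, 2.0, 5.0):
    print(f"{latency_ms} ms of latency = {cycles_of_delay(latency_ms):.0f} loop cycles")
```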

With a computer engineering background, I find that having to develop in LabVIEW fills me with dread. While I am sure LabVIEW is appropriate for something, I have yet to find it. Why is it so detestable? I thought you’d never ask.

- Wires! Everywhere! The paradigm of using wires instead of variables makes some sort of sense, except that for anything reasonably complex, you spend more time arranging wires than you do actually coding. Worse, finding how data flows by tracing wires is tedious, especially since you can’t click and highlight a wire to see where it goes – clicking only highlights the current line segment of the wire. And since most wires aren’t completely straight, you have to click through each line segment to trace a wire to the end. [edit: A commenter pointed out that double-clicking a wire highlights the entire wire, which helps with the tracing problem]

- Spatial Dependencies. In normal code, it doesn’t matter how far away your variables are. In fact, in C you must declare locals at the top of functions. In LabVIEW, you need to think ahead so that your data flows don’t look like a rat’s nest. Suddenly you need a variable from half a screen away? Sure you can wire it, but then that happens a few more times and BAM! suddenly your code is a mess of spaghetti.

- Verbosity of Mathematical Expressions. You thought low-level BLAS commands were annoying? Try LabVIEW. Matrices are a nightmare. Creating them, replacing elements, accessing elements: any sort of mathematical expression takes forever. One-liners in a reasonable language like MATLAB become a whole 800×800 pixel mess of blocks and wires.

- Inflexible Real-Estate. In normal text-based code, if you need to add another condition or another calculation or two somewhere, what do you do? That’s right, hit ENTER a few times and add your lines of code. In LabVIEW, if you need to add another calculation, you have to start hunting around for space to add it. If you don’t have space near the calculation, you can add it somewhere else, but then suddenly you have wires going halfway across the screen and back. So you need to program like it’s the old-school days of BASIC, where you label your lines 10, 20, 30 so you have space to go back and add 11 if you need another calculation. Can’t we all agree we left those days behind for a reason? [edit: A commenter has mentioned that holding Ctrl while drawing a box clears space]

- Unmanageable Scoping Blocks. You want to take something out of an if statement? That’s easy, just cut & paste. Oh wait, no: if you do that, all your wires disappear. I hope you remembered what they were all connected to. Now I’m not saying LabVIEW and the wire paradigm could actually handle this use case, but compare this to cutting and pasting 3 lines of code from inside an if statement to outside. Three seconds, if that, compared to minutes of re-wiring.

- Unbearably Slow. Why is it that when I bring up the search menu for Functions, LabVIEW 2010 freezes for 5 seconds, then randomly shuffles the windows around, making me go back and hunt for the search box so I can search? I expect better on a quad-core machine with 8 GB of RAM. Likewise, compiles to the real-time target are 1-5 minute long operations. You say, “But C++ can take even longer” and this is true. However, C++ doesn’t make compiles blocking, so I can modify code or document code while it compiles. In LabVIEW, you get to sit there and stare at a modal progress bar.

- Breaks ALT-TAB. Unlike any other normal application, if you ALT-TAB to any window in LabVIEW, LabVIEW completely reorders the Windows Z-order so that you can’t ALT-TAB back to the application you were just running. Instead, LabVIEW helpfully pushes all other LabVIEW windows to the foreground, so if you have 5 subVIs open, you have to ALT-TAB 6 times just to get back to the other application you were in. This of course means that if you click on one LabVIEW window, LabVIEW will kindly bring all the other open LabVIEW windows to the foreground, even those on other monitors. This makes it a ponderous journey to swap between LabVIEW and any other open program, because suddenly all 20 of your LabVIEW windows spring to life every time you click on one.

- Limited Undo. Visual Studio has nearly unlimited undo. In fact, I once was able to undo nearly 30 hours of work to see how the code evolved during a weekend. LabVIEW, on the other hand, has incredibly poor undo handling. If a subVI runs at a high enough frequency, just displaying the front panel is enough to cause misses in the real-time target. Why? I have no idea. Display should be much lower priority than something I set to ultra-high real-time priority, but alas, LabVIEW will just totally slow down at mundane things like GUI updates. Thus, in order to test changes, subVIs that update at high frequencies must be closed prior to running any modifications. Of course, this erases the undo history. So if you add a modification, close the subVI, run it, and discover it isn’t a good modification, you have to go back and remove it by hand. Or if you broke something, you have to go back and trace your modifications by hand.

- A Million Windows. Please, please, please for the love of my poor taskbar, can we not have each subVI open up two windows for the front/back panel? With 10 subVIs open, I can see maybe the first letter or two of each subVI. And I have no idea which one is the front panel and which is the back panel except by trial and error. The age of tabs was born, oh I don’t know, like 5-10 years ago? Can we get some tab love please?

- Local Variables. Sure you can create local variables inside a subVI, but these are horribly inefficient (copy by value) and the official documentation suggests you consider shift registers, which are variables associated with loops. So basically the suggested usage for local variables is to create a for loop that runs once, and then add shift registers to it. Really LabVIEW, really? That’s your advanced state-of-the-art programming?

- Copy & Paste. So you have an N×M matrix constant and want to import or export data. Unfortunately, paste only works with single cells, so have fun pasting in N*M individual numbers. Luckily, if you want to export a matrix, you can copy the whole thing. So you copy the matrix, go over to Excel, paste it in and… suddenly you’ve got an image of the matrix. Tell me again how useful that is? Do you magically expect Excel to run OCR on your image of the matrix? Or how about this scenario: you’ve got a wire probed and it has 100+ elements. You’d like to get that data into MATLAB somehow to verify or visualize it. So you right-click and do “Copy Data” and go back to MATLAB to paste it in. But there isn’t anything to paste! After 10 minutes of Googling and trial and error, it turns out that you have to right-click and “Copy Data”, then open up a new VI, paste in the data, which shows up as a control, which you can then right-click and select “Export -> Export Data to Clipboard”. Seriously?!? And it doesn’t even work for complex representations; only the real part is copied! I think nearly every other program figured out how to copy and paste data in a reasonable manner, oh say, 15 years ago?

- Counter-Intuitive Parameters. Let’s say you want to modify the parameters to a subVI, i.e. add a new parameter. Easy right? Just go to the back panel with the code and tell it which variables you want passed in. Nope! You have to go to the front panel, right-click on the generic looking icon in the top right hand corner, and select Show Connector. Then you select one of those 6×6 pixel boxes (if you can click on one) and then the variable you want as a parameter. LabVIEW doesn’t exactly go out of its way to make common usage tasks easy to find.
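
For contrast with the matrix complaint in the list above, here is roughly what creating a matrix, replacing an element, and multiplying look like in a text-based language. This is only a sketch in plain Python with made-up values; MATLAB would be shorter still, since multiplication is a built-in operator there.

```python
# Hypothetical 2x2 matrices; the values are illustrative only.
A = [[1.0, 2.0], [3.0, 4.0]]
B = [[0.0, 1.0], [1.0, 0.0]]

A[0][1] = 5.0  # replace a single element in place


def matmul(X, Y):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*Y)] for row in X]


C = matmul(A, B)  # the whole product is one expression
print(C)
```

Each of the three operations is a single line of text that can be moved, copied, or deleted without rewiring anything.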

Now granted, there are some nice things about LabVIEW. Automatic garbage collection [or rather the implicit instantiation and memory management of the dataflow format, as one commenter pointed out], easy GUI elements for changing parameters and getting displays, and… well, I’m sure there are a few other things. But my point is, I am now reasonably proficient in LabVIEW basics and still have no idea how people manage to get things coded in LabVIEW without wanting to tear their hair out. There are people who love LabVIEW, and I wish I knew why, because then maybe I wouldn’t feel such horrific frustration at having to develop in LabVIEW. I refuse to put it on my resume and will avoid any job that requires it. Coding in assembly language is more fun.