As a computer vision/controls grad student at CMU, I often work with very large image/video datasets. My latest project involves a terabyte or so of image data, all compressed in JPEG format to save space. I usually analyze the data in batches and am interested in maximum speed for minimum CPU usage. Recent 1 TB hard drives have linear read speeds of 50-100 MB/s. With a quad-core or higher processor, the processing itself is often not the bottleneck. This is especially true if you want to do simple image processing (image resizing, corrections, converting, face detection, etc.) that can sustain significant throughput. The bottleneck is often decoding the data.

JPEG Decoders

In my case, I want the fastest JPEG decoder that could take a JPEG image in memory and decompress it into a RGB pixel array. I didn’t find much in the way of JPEG decoder benchmarks so I decided to evaluate some of the more promising ones I found. Here is the roundup:

- Mini JPEG Decoder: A simple C++ port of NanoJPEG that is one single file header and very easy to use.

- STBI JPEG Decompression: Another simple one C file JPEG decompression algorithm.

- Libjpeg: Probably the gold standard for JPEG encoding/decoding, but developed quite a while ago (1997) and not entirely trivial to use. Libjpeg has the additional annoyance that the original source code doesn’t support decoding from memory, so I used the TerraLib wrapper class and their libjpeg extension that adds support for reading/writing JPEGs directly to and from a buffer in memory.

- Matlab (commercial): While not a JPEG decoder, it does contain one and is a widely-used package for researchers, so it is useful to compare against.

- Intel IPP (commercial): Perhaps one of the most well known “optimized” libraries for the Intel platforms. It contains a UIC JPEG decoder that is highly optimized for most varieties of Intel CPUs (it has separate code that is specifically optimized for each type of Intel CPU).

- Libjpeg SIMD (page in Japanese): A port of the popular libjpeg to use modern SSE processor instructions by optimizing the core routines in assembly language. The interface is supposed to be compatible with libjpeg. Currently only in 32-bit. I used the same TerraLib libraries for using memory buffers with libjpeg as before.

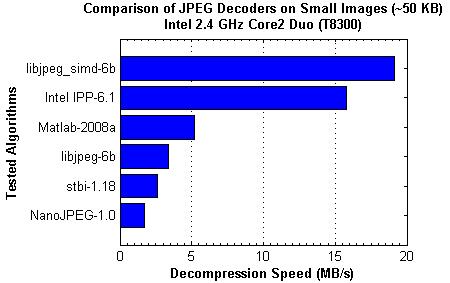

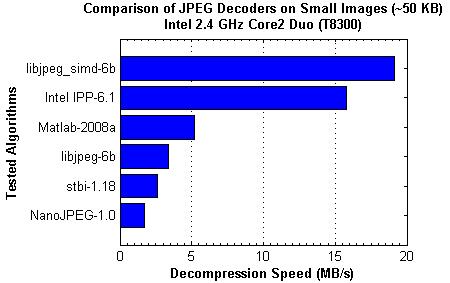

Comparison of JPEG Decoding Speeds

To compare these 6 JPEG decoders, I devised an experiment: load ~2,000 small images (each image was ~50 KB in size, for ~100 MB of compressed JPEG data) and decode them. The 102 MB of compressed data expanded out to 1526 MB uncompressed, so the compressed data is 6.7% of the uncompressed size. That’s pretty good compression there. The whole 100 MB was loaded into memory to avoid hard drive slowdowns (4 GB RAM with no paging file) and each algorithm was run 3 or more times to get an average. The JPEG data was decoded directly from memory except in the case of Matlab, where the JPEG files were read off a virtual hard drive backed by memory using ImDisk Virtual Disk Driver. So all results should be very comparable. Below is a graph detailing the decompression speeds in MB/s on my Intel 2.4 GHz Core2 Duo laptop. I compiled everything with Visual Studio 2008 Professional with all optimizations on, including SSE2 instructions, in hopes that the compiler could optimize the plain C or C++ code.
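For anyone who wants to repeat this kind of measurement, here is a minimal sketch of a timing harness. It is not the code I used for the graphs. The names `JpegBuffer`, `DecodeFn`, `benchmark_decoder`, and `megabytes_per_second` are made up for illustration, the decoder is passed in as a plain callable so any of the libraries above could be wrapped to fit, and 1 MB is assumed to mean 1024 * 1024 bytes.

```cpp
#include <cassert>
#include <chrono>
#include <cstddef>
#include <cstdint>
#include <functional>
#include <vector>

// One JPEG file held entirely in memory.
using JpegBuffer = std::vector<std::uint8_t>;

// Hypothetical decoder signature: compressed bytes in, RGB pixels out.
// A real library call would be wrapped to fit this shape.
using DecodeFn = std::function<std::vector<std::uint8_t>(const JpegBuffer&)>;

// Throughput in MB/s, assuming 1 MB = 1024 * 1024 bytes.
double megabytes_per_second(std::size_t bytes, double seconds) {
    assert(seconds > 0.0);
    return (static_cast<double>(bytes) / (1024.0 * 1024.0)) / seconds;
}

struct BenchmarkResult {
    std::size_t compressed_bytes = 0;  // total size of the JPEG input
    std::size_t decoded_bytes = 0;     // total size of the decoded output
    double seconds = 0.0;              // average wall-clock time of one pass
};

// Decode every buffer `runs` times and average the time per pass.
BenchmarkResult benchmark_decoder(const std::vector<JpegBuffer>& images,
                                  const DecodeFn& decode, int runs = 3) {
    assert(runs > 0);
    BenchmarkResult result;
    for (const JpegBuffer& img : images) result.compressed_bytes += img.size();

    double total_seconds = 0.0;
    for (int r = 0; r < runs; ++r) {
        std::size_t decoded = 0;
        const auto start = std::chrono::steady_clock::now();
        for (const JpegBuffer& img : images) {
            decoded += decode(img).size();  // use the output so the call is not skipped
        }
        const auto stop = std::chrono::steady_clock::now();
        total_seconds += std::chrono::duration<double>(stop - start).count();
        result.decoded_bytes = decoded;
    }
    result.seconds = total_seconds / runs;
    return result;
}
```

Dividing `compressed_bytes` by `seconds` with `megabytes_per_second` gives the compressed MB/s figure used in this comparison. Everything is already in memory before the clock starts, so disk speed stays out of the measurement.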

The results actually surprised me in some ways. Being focused on simplicity, STBI and NanoJPEG’s poor performance was to be expected. Also, Matlab does try to make the core routines fairly fast. However, I know Matlab uses the Intel MKL math libraries, so it surprised me that Matlab wasn’t using the Intel IPP libraries for faster JPEG decompression. Of course, I was using Matlab 2008 so perhaps newer versions have added this feature. What surprised me the most was that the libjpeg SIMD outperformed the Intel IPP. Intel has put a lot of effort into providing libraries that squeeze the most out of their processors, so it was a definite shock to see them outperformed. The results were fairly encouraging overall, especially with libjpeg SIMD performing over 3X faster than Matlab and over 4X better than the gold standard libjpeg. It is unfortunate it is only 32-bit.

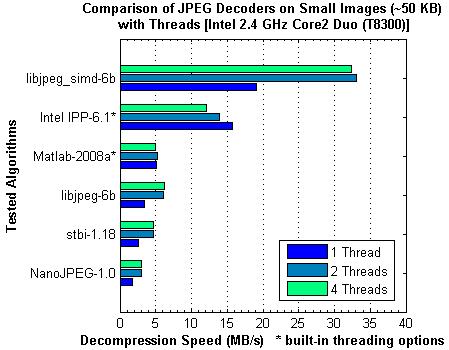

Speeding Up JPEG Decompression on Multi-Core Systems

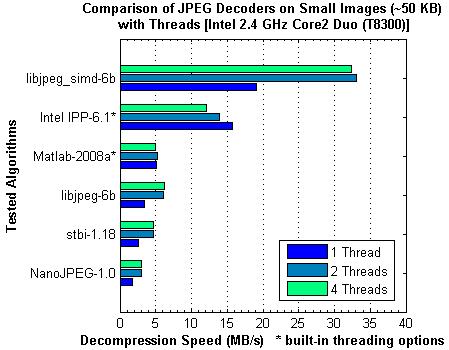

Given that the top performer’s speed in JPEG decoding is still ~2-5X slower than what current hard drives can pump out, it makes sense on multi-core/processor systems to use multiple threads to parallelize the decoding. To test how each algorithm scales with multiple threads, I tried the same test except splitting the ~2,000 images over 1, 2, or 4 threads for decoding. I tested on a 2-core system, so realistically I should not see any improvement with the 4-thread decoding. Matlab and the Intel IPP both had built-in options to use multiple cores when performing operations, so I used these options instead of splitting the files off into different threads. The results of these tests are shown below.

The results in general make sense: 2 threads is roughly twice as good as using 1 thread, but using 4 threads doesn’t help and in some cases hurts performance. The two big trend-breakers were Matlab and the Intel IPP. It seems Matlab has not added any multi-threading capabilities to its JPEG decoding routines since the performance difference was negligible. However, the Intel IPP performance gets worse the more threads you add! This doesn’t make sense at all, unless you take a look under the hood. While I am splitting my 2,000 files over multiple threads (say 1,000 images for Thread 1 to decode and 1,000 for Thread 2) for the rest of the algorithms, the Intel IPP package is trying to use multiple threads to decode each image (so part of one image gets decoded by Thread 1 and the other part by Thread 2). This works well if you need to load big images, but for small images, the overhead of creating and destroying threads for each image is so much that it not only counteracts the gains but causes worse performance as additional threads are used. While I didn’t test running the Intel IPP in the same manner I did the rest of the algorithms, I suspect the results would improve: 2 threads would nearly double performance, and moving from 2 to 4 threads would have very little effect at all.
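The "split the files over threads" approach can be sketched roughly as follows. This is an illustration rather than my actual test code: `chunk_range`, `decode_all`, and `DecodeFn` are hypothetical names, and the decoder callable is assumed to be safe to call from several threads at once (for example, because it creates its own decoder state on every call).

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <functional>
#include <thread>
#include <utility>
#include <vector>

using JpegBuffer = std::vector<std::uint8_t>;
using RgbImage = std::vector<std::uint8_t>;
// Hypothetical decoder signature; assumed thread-safe.
using DecodeFn = std::function<RgbImage(const JpegBuffer&)>;

// Half-open index range [first, last) handled by worker `t` out of `threads`.
// The first (count % threads) workers each take one extra image.
std::pair<std::size_t, std::size_t> chunk_range(std::size_t count,
                                                std::size_t threads,
                                                std::size_t t) {
    assert(threads > 0 && t < threads);
    const std::size_t base = count / threads;
    const std::size_t extra = count % threads;
    const std::size_t first = t * base + std::min(t, extra);
    const std::size_t last = first + base + (t < extra ? 1 : 0);
    return {first, last};
}

// Decode whole images in parallel. Each thread owns a contiguous block of
// files and writes only to its own output slots, so no locking is needed.
std::vector<RgbImage> decode_all(const std::vector<JpegBuffer>& images,
                                 const DecodeFn& decode,
                                 std::size_t threads) {
    assert(threads > 0);
    std::vector<RgbImage> out(images.size());
    std::vector<std::thread> workers;
    workers.reserve(threads);
    for (std::size_t t = 0; t < threads; ++t) {
        const auto range = chunk_range(images.size(), threads, t);
        workers.emplace_back([&images, &out, &decode, range] {
            for (std::size_t i = range.first; i < range.second; ++i) {
                out[i] = decode(images[i]);
            }
        });
    }
    for (std::thread& w : workers) w.join();
    return out;
}
```

With 2,000 images and 2 threads, `chunk_range` hands images 0-999 to the first thread and 1000-1999 to the second. Threads are created once per batch rather than once per image, which is the overhead that hurts the per-image threading described above.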

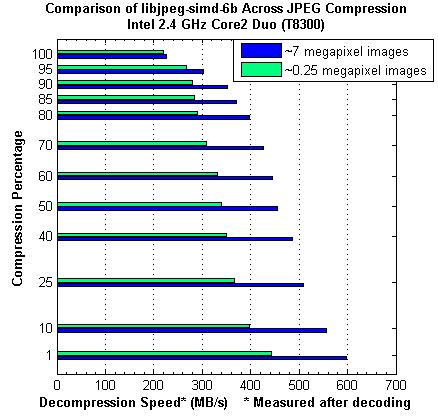

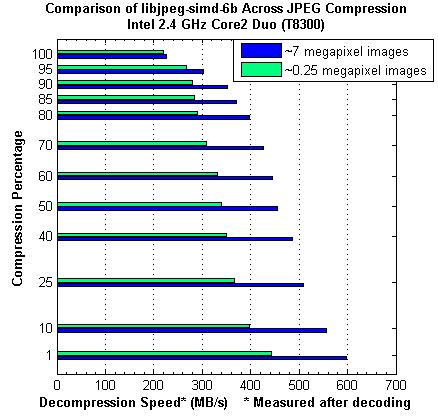

Effects of JPEG Compression Level and Image Resolution

Finally, I was curious about how the JPEG compression level (usually specified 1-100%) and the image resolution affected the decompression speed. This is important if you are looking at the storage vs. decompression speed trade-off. Let’s say you have 800 MB of uncompressed images (BMP, PPM, RAW, etc.) and want to save them for maximum decompression speed later. What compression is best? What size should you store them at? To analyze this, I took typical large images from my 7 megapixel camera and the smaller, web-sized images at 1/4 megapixels and compared the decompression speed as I varied the JPEG compression level. NOTE: I have changed how I measure decompression speed here from MB/s of compressed JPEG data to MB/s of uncompressed image data. In other words, I am measuring how fast I can decode the 800 MB, since the compressed JPEGs change size as I change the compression ratio. For these experiments, I only used the best performing JPEG decoder, libjpeg SIMD.
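To make the change of units concrete, here is a small sketch of the uncompressed metric. The function names are hypothetical, the decoded image is assumed to be packed 8-bit RGB (3 bytes per pixel), and 1 MB is again taken as 1024 * 1024 bytes.

```cpp
#include <cassert>
#include <cstddef>

// Size in bytes of a decoded image stored as packed 8-bit samples
// (3 channels for RGB).
std::size_t decoded_size_bytes(std::size_t width, std::size_t height,
                               std::size_t channels = 3) {
    return width * height * channels;
}

// Decode speed in MB/s of uncompressed output. The result depends only on
// the pixel dimensions, the image count, and the elapsed time, so it stays
// comparable when the JPEG quality setting changes the file size.
double uncompressed_mb_per_second(std::size_t width, std::size_t height,
                                  std::size_t image_count, double seconds) {
    assert(seconds > 0.0);
    const double bytes =
        static_cast<double>(decoded_size_bytes(width, height)) *
        static_cast<double>(image_count);
    return bytes / (1024.0 * 1024.0) / seconds;
}
```

As an illustrative case (not my actual camera settings), a 3072 x 2304 image decodes to 21,233,664 bytes, or 20.25 MB, no matter how small the JPEG file on disk is.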

At first, the results caught me off guard. I was expecting lower compression percentages to take longer to decode as more work was being done for the compression. However, I suppose when you think about it, the best possible compression would be to reduce each image into one pixel with the average RGB – and to decompress that you just fill all the pixels in the image with your average RGB which would be really fast. So higher compression percentages don’t really gain you any speed increases, which is interesting. Also, it is interesting to see that the larger images decode much faster than smaller images, which is probably due to caching of images and reduction of setting up the JPEG decoder for each image (reading headers, allocating/deallocating, etc, etc).
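The per-image setup explanation can be illustrated with a toy model. This is an assumption on my part, not something I measured: suppose every image pays a fixed setup cost and then decodes at a steady rate. The function name and all numbers in the usage note are made up.

```cpp
#include <cassert>

// Toy model: each image costs `setup_seconds` of fixed overhead (reading
// headers, allocating buffers) plus its size divided by a steady decode rate.
// Returns the effective throughput in MB/s for images of `image_mb` each.
double effective_mb_per_second(double image_mb, double setup_seconds,
                               double steady_mb_per_second) {
    assert(image_mb > 0.0 && setup_seconds >= 0.0 && steady_mb_per_second > 0.0);
    const double seconds = setup_seconds + image_mb / steady_mb_per_second;
    return image_mb / seconds;
}
```

With a hypothetical steady rate of 64 MB/s and a deliberately exaggerated 0.25 s of setup per image, a 16 MB image comes out at 32 MB/s while a 1 MB image manages under 4 MB/s. The fixed cost is amortized over far more pixels in the large image, which matches the direction of the trend in the graph.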

Conclusion

From these results, we can conclude that libraries optimized for modern processors are several times faster than their simple C/C++ counterparts. Also, JPEG decoding generally scales pretty well with the number of threads as long as each image is independent of the others. Trying to use multiple threads to decode a single image is counterproductive for small JPEG images, and only somewhat useful for larger images (I tested the Intel IPP with the 7-megapixel images and found that 2 threads is only ~1.3X faster than using 1 thread, compared to ~1.7X faster for using threads on separate images). When choosing a compression setting, lower is better for both conserving storage space and decompression speed. Also, decompressing smaller pictures is noticeably slower than decompressing larger pictures.

So, let’s talk about the take home message. What is the best JPEG decoder for you? It depends on your needs:

- Simple, easy, leisurely decompression: NanoJPEG or STBI. I find NanoJPEG easier, just include one *.h file and you are done. It’s got a nice little class and everything.

- Fastest possible decoding of large images: Intel IPP is your friend because you can use multiple threads per image.

- Fast decoding of images, only need 32-bit: libjpeg SIMD is really amazing, but it currently is only 32-bit and takes some work to compile (the website does have precompiled binaries that I haven’t tried). For instructions on compilation, check out the post by sfranzyshen (the exact instructions are a bit down on the page, search for SIMD).

- Fairly fast, stable, 64-bit decoding: libjpeg is the gold standard and is fairly good for what it does.

So there you have it, a brief analysis of JPEG decoding. Go out and enjoy some JPEG decompression goodness!
